Facebook, Google Get One Hour From EU to Scrub Terror Content

Yet more absurdity from Brussels, where regulators seemingly don't understand how the Internet works.


CNNTech (“EU gives tech companies 1 hour to remove terrorist content”):

Europe is telling tech companies to take down terrorist content within an hour of it being flagged — or face sweeping new legislation.

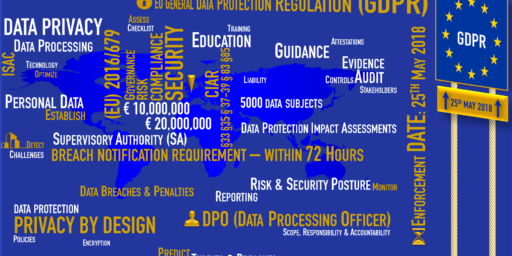

The European Union, which published a broad set of recommendations on Thursday, said that tech companies would have three months to report back on what they were doing to meet the target.

“While several platforms have been removing more illegal content than ever before … we still need to react faster,” Andrus Ansip, a European Commission official who works on digital issues, said in a statement.

The Commission said that tech companies should employ people to oversee the process of reviewing and removing terrorist content.

If there is evidence that a serious criminal offense has been committed, the companies should promptly inform law enforcement.

The guidelines could form the basis of new legislation that would heap new demands on social media companies operating in Europe, where regulators have taken a tougher approach to the industry.

Facebook, Twitter, YouTube and Microsoft (MSFT) all agreed in 2016 to review and remove a majority of hate speech within 24 hours. The category includes racist, violent or illegal posts.

EDiMA, an industry association that includes Facebook (FB), YouTube parent Google (GOOGL) and Twitter (TWTR), said it was “dismayed” by the Commission’s announcement.

“Our sector accepts the urgency but needs to balance the responsibility to protect users while upholding fundamental rights — a one-hour turn-around time in such cases could harm the effectiveness of service providers’ take-down systems rather than help,” it said in a statement.

As noted here yesterday and many times in the past, Europe doesn’t view speech as a “fundamental right” in the same sense we do.

Gizmodo (“Europe to Facebook and Google: Remove Illegal Content in One Hour (We’re Serious This Time!)”) adds:

The European Union really wants tech companies to get their shit together when it comes to policing content on their platforms. On Thursday, it issued new guidelines for how companies like Twitter, Google, and Facebook should handle illegal content on their European websites: quickly, proactively, and with human oversight.

The European Commission, the EU’s executive body, recommended that tech companies scrub any illegal content—including terrorist material, child pornography, and hate speech—from their platforms within an hour of it being reported by law enforcement officials. Additionally, the Commission said it would like to see greater use of both “proactive measures, including automated detection” and human supervisors “to avoid unintended or erroneous removal of content which is not illegal.”

It’s worth emphasizing these guidelines are strictly guidelines—for now. Tech companies are not legally bound by the new recommendations, but the Commission has vowed to monitor their effect and drop the hammer with “necessary legislation” if they fail to see the desired results.

And this isn’t the first time the Commission has tried to threaten tech giants with non-binding recommendations. In September of last year, it released guidelines for Facebook, Twitter, YouTube, and Microsoft that outlined ways in which the companies can better crack down on illegal hate speech. And in December of 2016, it dragged these same companies for not effectively complying with a code of conduct they signed in May of that year, which urged them to handle illegal hate speech within 24 hours.

Given that a voluntary agreement to remove vile content within 24 hours proved futile, it’s unclear why tech firms would now, out of the goodness of their hearts, comply with the one-hour recommendation. The Commission (like the platforms themselves) seems to be betting big on automation.

While I get the regulatory intent here, the expectation that giant tech companies hosting millions, if not hundreds of millions, of pages of user-generated content should squash offensive sites instantaneously is absurd even setting aside free speech issues. It just doesn’t make sense technologically and seems an obviously undue burden.

At the same time, I’m experiencing a wee bit of schadenfreude. OTB has been banned from Google’s AdSense programs for years for “offensive” content. I’ve deleted some examples, even though they in no reasonable way violated the plain language of their guidelines. I submitted my latest appeal yesterday and it was promptly denied.

Example page where violation occurred: http://www.outsidethebeltway.

As stated in our program policies, we may not show Google ads on pages with content that is sexually suggestive or intended to sexually arouse. This includes, but is not limited to:

- pornographic images, videos, or games

- sexually gratifying text, images, audio, or video

- pages that provide links for or drive traffic to content that is sexually suggestive or intended to sexually arouse

I can scarcely imagine anyone outside the most oppressive regimes finding the image in question pornographic. Moreover, the link in question goes to an image page, not a post. Ironically, the post that references that picture, which was posted almost exactly seven years ago and served Google ads for years without complaint, was about free expression.

You can’t make this stuff up.

I’m old enough to remember when lawmakers in the US thought they could pull this stuff over here, not over terrorist material, child pornography, and hate speech, but over “pirated” content.

I don’t understand this sentiment at all.

It’s their choice if giant tech companies want to try and make money by publishing user-generated content.

Guess what? Publishers are responsible for what they publish. How can it be right that these companies are allowed to make money off publishing content, but are simultaneously not responsible after having published material that is prima facie illegal?

I understand that tech companies like the concept of private profits, while letting the less-than-beneficial side effects of their business model be the responsibility of society at large. But there’s really no reason why this should fly.

So maybe Google and Facebook need to hire a bunch of additional staff. Tough luck. If they can’t hack it, they should be in a different line of business.

@drj:

I don’t think this is a reasonable assessment of what the companies do.

Google, in particular, is in the advertising and search businesses, mostly. They’re not “publishing” OTB’s content, for example, they’re simply making it easier for outsiders to find it. The notion that they’re responsible if some site they index advocates violence is just nuts. Hell, they’re doing the authorities a favor: Hire more cops to Google “rape” and “murder” and go after the perps.

Facebook is more complicated, I guess. But their inherent business model is allowing people to push content to their online “friends” and engage with one another while serving advertising. I don’t know that they need to police the content that gets posted any more than the US Postal Service is responsible for policing threats sent via First Class mail.

@James Joyner:

That doesn’t mean Google is not a publisher.

It’s comparable to the old yellow pages. Just as the yellow pages would have been legally liable for running a listing for a hit man (or a drug dealer), Google can’t just show illegal content.

And just as the yellow pages had a publisher that made money off the enterprise, Google makes money off its search results.

Moreover, Google’s ranking isn’t some sort of neutral reflection of the online world. Pages owned by Google and its partners get an artificial boost in ranking. If Google can decide that “friendly” sites are shown on the first page of their search results, they should also be able to decide to not show illegal content.

So yeah, Google is a publisher. Facebook even more so. Which means that they ought to be liable for what they publish.

By the way, your comparison between Facebook and the Postal Service doesn’t make much sense, as the USPS a) doesn’t publish the letters it processes and b) isn’t even supposed to know what these letters say.

@drj:

I don’t understand your comparison; the Yellow Pages actively ran advertisements submitted by businesses; Google indexes the entire Internet. Yes, it prioritizes its own pages. That doesn’t mean it should have to find the handful of needles in the haystack of the Web—let alone different needles for every jurisdiction.

But USPS has a much more active role than Facebook—they actually drive out to your house and pick up the letter, manually sort it, then deliver it to another address. That’s much more involved than allowing users to set up accounts and share pictures of their kids with their “friends.”