The Polls Are Essentially Meaningless But They’re Here to Stay

They could be overstating support for Democrats. Or understating it. Or be more or less right.

NYT political analyst David Leonhardt asks, “Are the Polls Wrong Again?”

The final polls in the 2020 presidential election overstated Joe Biden’s strength, especially in a handful of states.

The polls reported that Biden had a small lead in North Carolina, but he lost the state to Donald Trump. The polls also showed Biden running comfortably ahead in Wisconsin, yet he won it by less than a percentage point. In Ohio, the polls pointed to a tight race; instead, Trump won it easily.

In each of these states — and some others — pollsters failed to reach a representative sample of voters. One factor seems to be that Republican voters are more skeptical of mainstream institutions and are less willing to respond to a survey. If that’s true, polls will often understate Republican support, until pollsters figure out how to fix the problem. (I explained the problem in more depth in a 2020 article.)

This possibility offers reason to wonder whether Democrats are really doing as well in the midterm elections as the conventional wisdom holds. Recent polls suggest that Democrats are favored to keep control of the Senate narrowly, while losing control of the House, also narrowly.

But the Democrats’ strength in the Senate campaign depends partly on their strength in some of the same states where polls exaggerated Democratic support two years ago, including the three that I mentioned above: North Carolina, Ohio and Wisconsin.

Emphasis mine.

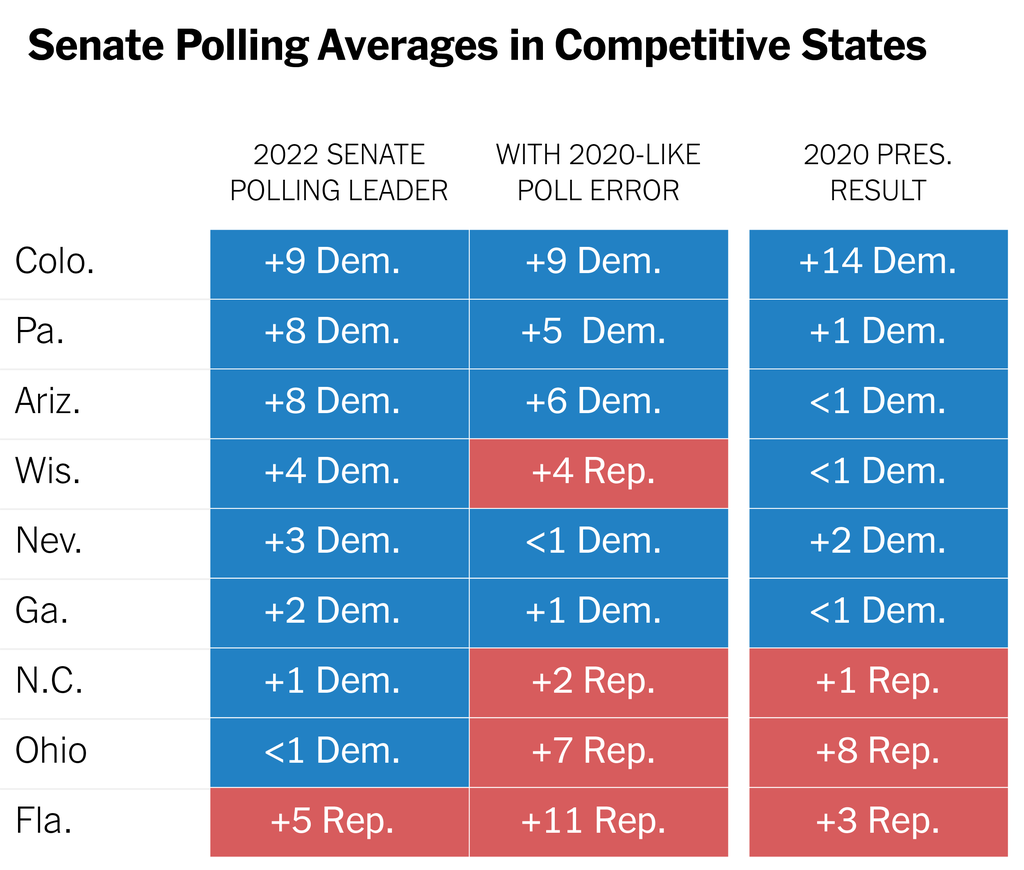

He provides this handy dandy chart showing the deltas:

He points us to a column by his colleague Nate Cohn, “Yes, the Polling Warning Signs Are Flashing Again.”

Ahead of the last presidential election, we created a website tracking the latest polls — internally, we called it a “polling diary.” Despite a tough polling cycle, one feature proved to be particularly helpful: a table showing what would happen if the 2020 polls were as “wrong” as they were in 2016, when pollsters systematically underestimated Donald J. Trump’s strength against Hillary Clinton.

Very much like the table Leonhardt used above.

We created this poll error table for a reason: Early in the 2020 cycle, we noticed that Joe Biden seemed to be outperforming Mrs. Clinton in the same places where the polls overestimated her four years earlier. That pattern didn’t necessarily mean the polls would be wrong — it could have just reflected Mr. Biden’s promised strength among white working-class voters, for instance — but it was a warning sign.

That warning sign is flashing again: Democratic Senate candidates are outrunning expectations in the same places where the polls overestimated Mr. Biden in 2020 and Mrs. Clinton in 2016.

After diving into one particular instance, he observes,

If the polls are wrong yet again, it will not be hard to explain. Most pollsters haven’t made significant methodological changes since the last election. The major polling community post-mortem declared that it was “impossible” to definitively ascertain what went wrong in the 2020 election.

The pattern of Democratic strength isn’t the only sign that the polls might still be off in similar ways. Since the Supreme Court’s Dobbs decision on abortion, some pollsters have said they’re seeing the familiar signs of nonresponse bias — when people who don’t respond to a poll are meaningfully different from those who participate — creeping back into their surveys.

Brian Stryker, a partner at Impact Research (Mr. Biden is a client), told me that his polling firm was getting “a ton of Democratic responses” in recent surveys, especially in “the familiar places” where the polls have erred in recent cycles.

None of this means the polls are destined to be as wrong as they were in 2020. Some of the polling challenges in 2020 might have since subsided, such as the greater likelihood that liberals were at home (and thus more likely to take polls) during the pandemic. And historically, it has been hard to anticipate polling error simply by looking at the error from the previous cycle. For example, the polls in 2018 weren’t so bad.

Some pollsters are making efforts to deal with the challenge. Mr. Stryker said his firm was “restricting the number of Democratic primary voters, early voters and other super-engaged Democrats” in their surveys. The New York Times/Siena College polls take similar steps.

But the pattern is worth taking seriously after what happened two years ago.

So, look, this has long been the challenge in polling. Back when telephone polling was king, the difference between the numbers shown by various polling outfits was their “likely voter screen”—the model they applied to weight the responses. If your screen is off, then your poll is off.

It’s gotten harder in recent years because it’s much easier to screen calls. And because online polling is simply a different animal. In both cases, we now have the additional problem that we’ve violated the first rule of polling: our samples are now self-selected, not random.

Anywho, Cohn then presents the same chart Leonhardt used above and observes,

The apparent Democratic edge in Senate races in Wisconsin, North Carolina and Ohio would evaporate. To take the chamber, Republicans would need any two of Georgia, Arizona, Nevada or Pennsylvania. With Democrats today well ahead in Pennsylvania and Arizona, the fight for control of the chamber would come down to very close races in Nevada and Georgia.

Regardless of who was favored, the race for Senate control would be extremely competitive. Republican control of the House would seem to be a foregone conclusion.

Leonhardt offers some hope for Democrats:

Nate is also careful to acknowledge what he doesn’t know, and he emphasizes that the polls may not be wrong this year in the same way that they were wrong in 2020. It’s even possible that pollsters are understating Democratic support this year by searching too hard for Republican voters in an effort to avoid repeating recent mistakes.

The unavoidable reality is that polling is both an art and a science, requiring hard judgments about which kinds of people are more or less likely to respond to a survey and more or less likely to vote in the fall. There are still some big mysteries about the polls’ recent tendency to underestimate Republican support.

The pattern has not been uniform across the country, for instance. In some states — such as Georgia, Nevada and Pennsylvania — the final polls have been pretty accurate lately. This inconsistency makes the problem harder to fix because pollsters can’t simply boost the Republican share everywhere.

There is also some uncertainty about whether the problem is as big when Trump is not on the ballot — and he is obviously not running for office this year. Douglas Rivers, the chief scientist of the polling firm YouGov, told me that he thought this was the case and that there is something particular about Trump that complicates polling. Similarly, Nate noted that the polls in the 2018 midterms were fairly accurate.

Finally, as Nate points out, the 2022 campaign does have two dynamics that may make it different from a normal midterm and that may help Democrats. The Supreme Court, dominated by Republican appointees, issued an unpopular decision on abortion, and Trump, unlike most defeated presidents, continues to receive a large amount of attention.

As a result, this year’s election may feel less like a referendum on the current president and more like a choice between two parties. Biden, for his part, is making this point explicitly. “Every election’s a choice,” he said recently. “My dad used to say, ‘Don’t compare me to the Almighty, Joey. Compare me to the alternative.’”

The bottom line here is that we really don’t know a hell of a lot about how the vote less than two months hence is going to go.

The obvious solution, then, is to quit spending a lot of money on polling for the purpose of generating “news” that is then analyzed and caveated to death. But, of course, that’s not going to happen because political junkies enjoy consuming these stories.

It’s much like the argument that sportswriters should quit generating, for example, preseason rankings of college football teams given how much teams change from year to year. Why, just play the games and we’ll know who’s good and who isn’t! But games are (mostly) played on Saturdays and only from September to January. What else are they going to use to generate content if not speculation?

That’s not the only reason polls exist. They are also used by the campaigns themselves. Their utility becomes easier to understand once you get past the simplistic framing of “Does it accurately predict whether a candidate will win?” Polls are mostly accurate to within a few points. The problem is that elections (especially the important ones we have our eyes on) are often decided by a few points, so even a relatively small error can cause it to point to a different winner. That was definitely the case in 2020 (where the average error by state was about 3-4 points, which is not massive from a statistical standpoint). That doesn’t make them useless as data when it comes to decisions such as where a campaign should be focusing its money and resources. If you took polls away, for instance, Dems probably wouldn’t have bothered campaigning in Arizona and Georgia (one state, I should mention, where the polls turned out very accurate), relying instead on the traditional assumption that they were red states that were a waste of time to try competing in.

Maybe that’s why polls predicting Republican wins in Kansas, NY-19, and Alaska were off?

Out of the frying pan, into the fire.

@Kylopod: is correct to point out the campaigns use the polls. And that they’re useful not for win/lose; after all, what’s the putative loser gonna do? Quit? They’re used tactically. Are we better off spending ad money stressing abortion to Blacks or the economy to Hispanics? Are the soccer moms really concerned about CRT? Are there more gettable undecideds in the suburbs or the city? And they design internal polling around those decisions.

The evenness of our partisan split is part of the problem. If Gallup said Reagan would beat Carter by 12 points and he only won by 10, people don’t get as upset as when they predict Hillary might win with a 3 point edge and she loses with a 2 point popular vote edge. (Actually there was some angst in 1980 over polls saying Reagan/Carter was closer than it turned out, but not like 2016.) Nate Silver made a point that weather forecasters tend to err toward wet. People who call off an event and it doesn’t rain don’t get as pissed as people who get rained on. In elections there’s no clear way to prefer to err. Silver predicts probabilities for that reason rather than binary win/lose. And he still catches spit if he says 55/45 and 45 wins. Few people, and virtually no journalists, understand probability.

Part of it is what I’ve been calling The Fallacy of Perfection. People expect polls to be correct, but it’s a business of approximations. If a poll says Hillary will win with a popular vote margin of 3% and it turns out she loses the election despite a popular vote margin of 2, that’s really pretty good accuracy. Comey, WAPO, and NYT all piled on Hillary, confident she’d win anyway. The blame should not be on Nate Silver for saying Hillary had 2:1 odds of winning, but on Comey, WAPO, and NYT for not understanding that meant 1 chance in 3 of Trump winning. (Other pollsters generally gave Trump lower odds than Silver.)
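The odds-to-probability step in that last point is easy to get wrong, so here is a minimal sketch of it. The function name `odds_to_probability` is hypothetical, and the only input is the 2:1 figure quoted above; it assumes the odds are stated as "for : against".

```python
from fractions import Fraction


def odds_to_probability(odds_for: int, odds_against: int) -> Fraction:
    """Convert 'for : against' odds into the probability of the favored outcome."""
    if odds_for < 0 or odds_against < 0 or odds_for + odds_against == 0:
        raise ValueError("odds must be non-negative and not both zero")
    return Fraction(odds_for, odds_for + odds_against)


# 2:1 odds for the favorite, as quoted in the comment above
favorite = odds_to_probability(2, 1)
underdog = 1 - favorite

print(f"favorite: {favorite}, underdog: {underdog}")
```

Under that reading, 2:1 leaves the underdog a one-in-three chance, which is far from a sure loss.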

@gVOR08:

The polls in 1980 were way worse than in 2016. The fact that 2016 was treated as a much bigger shock says something about people’s assumptions, not about the polls. The myth of the Unprecedented Polling Error of 2016 is partly based on the fact that loads of people just found the idea of Trump winning too outlandish to take seriously, and this led to confirmation bias where they viewed Clinton’s modest polling advantage as proof that she couldn’t lose, which is not actually what the polls indicated.

@Kylopod: @gVOR08: My late first wife was the COO of a major Republican polling firm. It makes good sense to spend money on the best polling you can to understand how your candidate is doing and figure out how to target spending. Focus groups are also quite helpful. Those are a very different thing, though, than the media-generated polls that are mostly for entertainment and content generation.

There is no reason to read David Leonhardt. Ever.

Each paragraph you read by him kills two of your brain cells.

@James Joyner:

Oh, come on–are you really being serious here? You actually think the polls put out by major news organizations are worse than internal campaign polls from partisan firms? You know better than to make such a claim. Or you should. In any case, this is demonstrably untrue. Internal polls are usually better than North Korean-style agitprop (“Mr. Trump, our latest survey found that you’re leading Mr. Biden by 97 points in Illinois.”), but they definitely suffer from bias on top of other methodological problems.

I think Nate Silver and the gang at 538 don’t conduct polls, rather they analyze the aggregate polling available for any given race for a particular span of time.

@Kylopod: No, my argument is simply that internal polls serve a different purpose than public-facing ones run by media companies and have different effects.

If you’re running a serious campaign, you’re hiring quality pollsters who are giving you their best assessment of the situation. Media polls vary. Some of them are basically online quizzes. Top-flight outfits like the NYT, though, hire/partner with top-notch pollsters. Indeed, MSNBC and WSJ both partner with my late wife’s firm to do their polls.

But here’s the thing: candidates and their campaign managers are more likely to understand what they’re consuming than the general public. If the poll is off by a couple of percentage points, they should understand that the poll is solid, indeed. But media-facing polls shape public opinion and, indeed, voters’ behavior. They drive overconfidence and non-participation. And, when they’re wrong (even if it’s well within the stated margin of error), they can be used to drive narratives of stolen elections. They are, in that sense, simply very different animals.

I do not accept “well, polls have a margin of error” as an explanation for 2020. If random variation were the issue, then we would see the errors in both directions, with D’s outperforming in some states, and R’s in others. That’s not what happened. There appeared to be a systematic bias. And that worries me a lot.
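A rough way to put a number on that intuition is a sign test. The sketch below assumes each state's polling error is independent and equally likely to favor either party; both are simplifying assumptions, and the function name `prob_all_same_direction` is hypothetical. Real state errors tend to move together, so this likely understates how often one-sided misses occur by chance.

```python
def prob_all_same_direction(n_states: int) -> float:
    """Chance that n independent, unbiased poll errors all lean the same way.

    Assumes each state's error is a fair coin flip (toward D or toward R)
    and that states are independent of one another.
    """
    if n_states < 1:
        raise ValueError("need at least one state")
    # Either every error favors D or every error favors R: 2 * (1/2)^n
    return 2 * 0.5 ** n_states


# Illustrative state counts only; these are not taken from any poll table.
for n in (3, 5, 9):
    print(n, prob_all_same_direction(n))
```

The probability falls quickly as the number of states grows, which is why a run of misses in one direction reads as bias rather than noise.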

We have now seen evidence of R officials trying to find “extra votes”, right?

Voter registration is increasing in most states since the Dobbs decision. Women and younger people are registering in excess of their numbers. How many pollsters are including that in their calculations?

Hint: I don’t expect any of the middle-aged males like Leonhardt and Cohn to pay any attention at all. I will be happy to admit it if I turn out to be wrong.

@James Joyner:

But that’s an indictment of media coverage of polls, not the polls themselves.

@Kathy:

Absolutely. Did anyone here suggest Nate Silver conducts polls? I didn’t notice it. I’ve definitely run across that mistake before, but not in this thread so far.

@Jay L Gischer: Nobody’s denying the error in 2020 was systemic. But that doesn’t mean we’ll see the same error this year. Other election cycles have not shown the same pro-D bias (e.g. 2018) or even underestimated Ds (e.g. 2012). And I think a lot of people overlook how much the 2020 polls were affected by the unusual conditions of the pandemic. It’s mentioned in passing in the above article, but I think it was a major factor. For one thing, there was the massive shift to vote-by-mail, which made traditional turnout models essentially useless and, as mentioned in the article, increased the chances that Dems (who stayed at home more) were even answering pollsters. Also (and this factor is especially overlooked), Democrats, due to taking the pandemic more seriously, largely stopped doing on-the-ground canvassing, whereas Republicans did it a lot more. This led to a turnout differential that was not likely to be detected by polls.

The article title is nonsense. Polls are not useless. Even going by Leonhardt’s table, the polls would call five of the nine races correctly, and that with a huge polling error. Clearly there is predictive use there.

This argument is akin to getting heads three times in a row and declaring coin flips useless.

Hahaha hahahahahaha!

James, you’re more honest and idealistic than the world deserves. FIRST and FOREMOST: polls are an influence tool/tactic to trigger the bandwagon instinct that is essentially undisciplined in most humans. For this purpose, they are ABSOLUTELY not useless.

Hell, they can net you a point or two alone if messaged correctly.

The age of the Technocrat is over. It will return, but with the generation of Americans that is still in the crib or on the way. The name of the game today is influence, and everything, even the trappings of Academia, is being used in this primary service. Whether the polling is accurate and useful for planning is secondary, cake icing to its primary purpose.

The key thing is that Trump changed nothing institutionally in a legitimate way which would work in the GOP’s long-term favor. The GOP got its ass kicked in 2012, and Trump changed that. He drew out disaffected voters in the midwest and polling missed that in both elections. But after four years what were the results? Dobbs and a media circus. The MAGA base might go in for the circus and hard-core Republicans at a state level are all-in to overrule elections. But banning abortion is a nightmare and the circus has an expiration date for the disaffected. To me, it’s possible that we are heading back, voting-wise, to where America was in 2012 right after Obama won, when all of the Republican moderates were talking about how the party has to change dramatically and broaden its appeal.

@Cheryl Rofer: I don’t think they can include it in their calculations. It should show up in the “likely voter” and “voter enthusiasm” parts of their model, but I don’t know how much I would trust a poll that tried to factor in “momentum” or tried to get creative in adapting to transient things.

@Kylopod: Yes–but the polls are generated by the media outlets for the precise purpose of serving as fodder for reporting and analysis on them.

@Tlaloc: The problem is that there seem to be sampling effects that have turned the polls into something other than random samples of the universe. We’re generating hundreds, if not thousands, of stories on the likely outcome in November based on these polls and dozens, if not hundreds, of stories fretting over whether the polls are right and in what direction they might be wrong. That’s interesting for pure political junkies but contributes to all manner of social problems.

I don’t know about US polling and all its variables.

Except that I suspect that in a country with massive regional, local and urban/suburban/nonurban divergences, any national polls need taking with a lot of salt for relevance.

Even state ones, perhaps?

I do know that in the UK, they are massively useful IF you take the trouble to get into the details of age groups, social class, income, past patterns etc and then map that against a constituency makeup.

To work back up from there for a national forecast is very effort intensive.

And the parties spend a lot of time, and money doing it.

So do the best forecasters.

I have some quick and dirty workrounds that work OK enough for my satisfaction.

Dump all the last election returns in a spreadsheet, regionalise them, then map the polling predictions onto that and poke it with a stick.

Because in my experience the errors average out.

Doesn’t always account for the “leadership bugger factor” though.

See J. Corbyn vs B. Johnson.

@Kylopod:

Was it systemic? National polling predicted a healthy Biden win, he won the national popular vote comfortably. State polling was off in some states and accurate in others.

Has there ever been a close election where state polling was right everywhere? To @Tlaloc’s point, polls have a margin of error for a reason. They’re not supposed to be 100% accurate 100% of the time in 100% of places.

@Modulo Myself:

Or maybe state polling did not have time to catch up to the Comey letter’s effect.

Like @Kylopod says, the prevailing narrative around 2016 polling is a myth. National polling average very accurately predicted Hillary’s popular vote win, down to the margin.

State polling tends to lag a few weeks behind national polling, and state polling did not have time to fully process the Comey letter brouhaha. After Comey’s interference, key state polls were indeed moving towards Trump. And final results in most swing states were within the polls’ margin of error, or am I wrong on that? I don’t totally recall.

I agree these perceptions around 2016 polling are driven mostly by the belief Trump could not win, which inadvertently helped him by changing behaviors, among them Comey’s (less likely to interfere if he thought Hillary would lose), Obama’s (would not have let Russian interference slide), and the media’s (would maybe have eased up on Emailghazigatepalooza).

Perceptions around 2020 polling are in part driven by one-off pandemic disruptions we’ve forgotten, like Democrats leaving votes on the table by eschewing in-person canvassing till October and like the long, slow vote-counting process.

Would we really think 2020 polls were so bad had unified Democratic control of government been declared on election night? Had there not been so many mail votes due to COVID? Had close House races Democrats ended up losing shifted 1-3% blue with more in-person voter engagement?

Foot canvassing is not thought to have a huge effect on national races, but that is not the case downballot where, for example, in municipal races studies show it can increase turnout by ~7%.

@James Joyner:

And yet, they’re more accurate on average than internal campaign polls generated by partisan firms.

Heck, I’ve often defended Fox News polls from liberals who are quick to dismiss them based on the source. They’ve got a decent track record. That makes up for a lot.

@DK:

Unlike in 2016, the 2020 polls strongly overestimated the degree of Biden’s popular-vote win, which was about 4.45 points in the end. 538 had it at 8 points, RCP at 7.2.

Pessimistic D’s certainly have reason to look at the last 3 election cycles where the R’s outperformed the polls and be concerned for this year at this point. Optimistic D’s can point to special elections before and after Dobbs.

Which one I am depends on the day. Or hour.

@James Joyner:

I’ve always assumed that internal polls (and focus groups) were looking much more at subsets of the voters. Eg, if the strategy depends on winning over soccer moms in the suburbs, then the internal stuff will look closely at that and not at voters as a whole.

That’s the kind of thing I’d look for from my internal pollsters if I were running, anyway. I read a piece this morning that has Kemp in Georgia campaigning heavily in white rural areas with a message that looks a lot like, “Make sure your relatives are registered, and vote. Because we need enough of you to outvote the Atlanta suburbs.” He knows that the white rural voters will vote for him, but he needs turnout because he’s not doing as well as historically in the Atlanta suburbs.

@Modulo Myself:

I think it’s a little complicated. In the Trump era, there’s been a significant shift of rural and white working-class voters toward the GOP, while college-educated whites and suburban voters have shifted significantly toward the Dems. In 2018 and 2020, the net effect of all this seemed to work overall toward the Dems’ benefit, but it has also contributed to the GOP’s disproportionate advantage in the electoral system compared with the popular vote.

Now, I should mention that I’m not convinced Trump himself is the sole cause of this shift; to some extent I think he was the beneficiary of trends that have been going on since before he entered the political scene.

But putting aside the question of cause, the trends themselves do have long-term consequences that may be negatively affecting the accuracy of polls. Additionally, there’s at least one thing I believe Trump has had a strong influence on all by himself, and it’s Republican voters’ refusal to accept mainstream sources of information, calling it “fake news” when it doesn’t say what they want to believe. (This tendency predates Trump of course, but Trump made it a lot more powerful.) That probably has an effect on their response rate toward pollsters.

I think a major problem with how modern polls are communicated is that they are presented as a binary horserace with much of the uncertainty removed. For example, if you look at the current 538 or RCP listings, all they show is the binary contest with which side has an advantage by X number of points. They don’t show undecided or alternative choices in the data.

For example, just picking the most recent generic ballot poll from 538, it’s 46 Democrat, 41 GOP, for a +5 Democratic advantage. But that leaves 13 points in the nether (46+41=87, 100-87=13).

It’s like that with most polls – the number of people who are either undecided or otherwise didn’t specifically make a binary choice is often much greater than the partisan split. The fact that the poll aggregators mostly ignore that is deceptive, especially for people who don’t look closely at the numbers.
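The arithmetic above generalizes to any topline. A minimal sketch, assuming a two-way horserace reported in percentage points (the function name `unallocated_share` is hypothetical, and the 46/41 figures are the ones quoted above):

```python
def unallocated_share(dem_pct: float, gop_pct: float) -> float:
    """Points not assigned to either major party (undecided, other, refused)."""
    total = dem_pct + gop_pct
    if total > 100:
        raise ValueError("two-party shares cannot exceed 100 points")
    return 100 - total


dem, gop = 46, 41                            # generic-ballot figures quoted above
margin = dem - gop                           # the headline lead
unallocated = unallocated_share(dem, gop)    # everyone not in the headline

# When the unallocated pool is larger than the lead, the lead alone
# says little about where those respondents end up.
print(f"lead={margin}, unallocated={unallocated}")
```

Showing both numbers side by side is one way an aggregator could make that uncertainty visible.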

@Just Another Ex-Republican:

By last three cycles, do you mean 2016, 2018, and 2020? R’s did not significantly outperform their polls in 2018. There’s an NYT link in the above article which goes into some detail about this, but I couldn’t access it. I do know that the generic ballot was extremely accurate–538 had it at +8.6, and D’s ended up winning the House popular vote by 8.7. The Senate was a little sketchier–there were several big Republican upsets, though there were also several Democratic ones as well. I think overall the error in Senate races was on average more toward underestimating Republicans, but what needs to be taken into account is the extreme pro-R skew in the map that year, where a whole bunch of Democrats were defending seats in very red states, with nothing comparable on the other side. (The only Republican seat being defended in a state Hillary won was Dean Heller in purplish Nevada, who did go on to lose his race, despite leading in many of the polls.) I do think there’s a long-term trend of Republicans outperforming polls in red states; it’s much, much less true in blue and purple states.

Did the polls exaggerate Democratic support, or Biden’s support? All the examples are presidential, but the President isn’t on the ballot.

I would also note the polls got the Kansas abortion initiative wrong, so post-Dobbs may be a very different landscape. Special elections seem to be running in Democrats’ favor this cycle as well.

But overall, polls are useful for measuring movement in the polled demographic. I’m not convinced that they are capturing the right demographic, but I would trust the movement.

So people are getting used to the idea of having Senator Chronic Traumatic Encephalopathy in Georgia, despite the obvious lack of mental acuity.

Is running literally brain damaged Black people going to be a new Republican strategy in states with a large Black population? Is that the dreaded identity politics?

I’m a little curious about the breakdowns there — I expect that running a Black guy dampens the reputation of Republicans as white supremacists, but running a mentally impaired Black guy might let the white supremacists nod sagely and think “yup, sure as the sun revolves around the earth, we really are supreme, dog-gone-it… and he’s our n-clang”

@DK: Several state polls were wrong, and they were wrong in the same way: they underpredicted Republican votes. Often the delta was outside the normal margin of error.

To me the most likely explanation for that is systemic bias of some sort. There’s a dark part of me that suspects foul play. Like an extra 200,000 R votes from a few districts in FL, for instance, that I read about. I don’t recall if it was Miami or Dade County or something like that. Without evidence, I mostly let that go, but this is where my gut goes when there’s a systemic error in polling.

@Kylopod: Yes those are the years I was thinking of. I’m probably mis-remembering but as I recall a strong blue wave was expected in 2018, and it ended up being much smaller than hoped (the Senate in particular). Which in my mind translates to Republican over-performance. I’m fuzzy on the details though and could easily be wrong.

@Just Another Ex-Republican:

And right there is an excellent example of the power of false narratives. The blue wave was not smaller than expected; it was pretty much exactly as expected. 538 projected a 39-seat Democratic pickup in the House; the Dems ended up gaining 40 seats. Perhaps some Dems got high on the idea that it would be more in the territory of 50 or 60 seats, but pretty much none of the serious analysis thought so.

And the Senate was strongly expected to remain in Republican hands, though the 2-seat pickup was more on the upper end of what was expected (538 gave them a little under 50% chance of picking up 2 or more seats). Again, some Dems hoped they could flip the Senate, but most analysts saw it at best as an uphill battle.

If there was a narrative that the expected blue wave failed to materialize, it was due to the early days after Election Day when many races were still being called and the Dems looked like they had only acquired a modest House majority. It wasn’t until a few weeks later that it became clear how wide their lead had become. But a lot of people didn’t stick around to find out.

You don’t know how frustrating it is hearing so many Dems accept the idea that modern polls consistently underestimate Republicans, and it is fueled by false narratives like the one you’ve articulated here. When you have Republicans uniformly making this claim (because they refuse to admit defeat) and loads of Dems uncritically accepting it (which I suspect is a byproduct of 2016 PTSD), it creates an illusion of truth based on the bipartisan consensus. Just because both parties believe something doesn’t make it accurate. And we Dems need to be especially cautious about feeding into Republican lies.