What You Think You Know About The Polls Isn’t Necessarily True

Once again, it seems necessary to debunk some commonly believed myths about polling.

Washington Post pollster Jon Cohen had an interesting piece in yesterday’s paper discussing some of the myths about polling that have reared their heads during this election season:

1. A campaign’s internal polls are more accurate than public polls.

The message from both sides, when they’re slumping, is consistent: Campaign polls are better at assessing voters’ intentions than the polls produced by news organizations and universities.

But there is a central flaw in private polling, at least what we get to see of it: Most campaign surveys are presented with a heavy dose of spin. The goals are also different, with a premium on testing messages and anticipating the effects of strategic decisions, often with tenuous assumptions about “likely voters” that may prove wrong.

If a campaign is releasing an internal poll, it’s doing so for a specific reason. Perhaps it’s trying to push back against a set of public polls that have shown the candidate trailing or losing the support of a specific demographic group. Perhaps it’s trying to divert attention from something else that’s happening in the news cycle. Whatever the motive, there’s no good reason to trust internal polls released or leaked by a campaign.

The one caveat I’d add is that campaigns do a lot of internal polling that is never released to the public. They do this because they need their own source on the state of the race, and to see whether their own message, and their opponent’s, is resonating with the public. Whether the news is good or bad, a campaign adviser cannot do the job without some idea of where the campaign stands, and public polling doesn’t always answer that question. I’d bet that those internal polls, the ones never released to the public, at least not during the course of the campaign, are a sight more accurate than the ones that get “leaked” to the press.

2. Polls prove that the first presidential debate upended the race.

Immediately after the debate, coverage focused on polls that moved in Romney’s direction. After all, a Gallup survey showed that 72 percent of debate-watchers said Romney did a better job, the most lopsided debate readout Gallup has ever recorded.

But there is little evidence that the debate decisively moved the needle in key swing states. In six state surveys released Thursday by two well-regarded polling partnerships — NBC-Wall Street Journal-Marist and CBS-New York Times-Quinnipiac — there were virtually no shifts for either candidate compared with pre-debate polls.

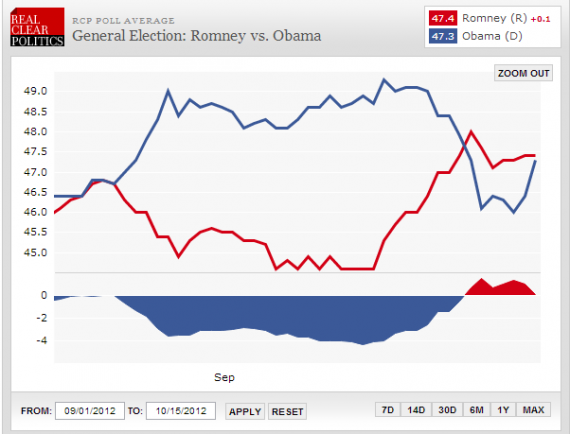

There’s no question that Romney has risen in the polls significantly since the October 3rd debate, but if you look at the national polling, there’s evidence that he was rising for at least a week before the debate. I can’t say I can figure out the reason why that might have been happening, other than the possibility that Romney’s ad campaign was just starting to kick in, but as this chart of the poll average from September 1st to today, Romney’s low point came on September 30th, several days before the debate:

The debate may have accelerated Romney’s momentum in the polls to some extent, but it didn’t cause it.

3. The best polls are those that correctly predict an election’s outcome.

Getting elections “right” is a necessary but insufficient reason to put great stock in polls. Some surveys could be well-modeled — adjusted to previous elections or to hunches, including, some surmise, tweaks to agree with other polls. (Few pollsters relish being an outlier.)

Both the Republican-leaning Rasmussen Reports and the Democratic-leaning Public Policy Polling have track records that some view favorably, but both use automated phone calls with recorded voices to collect data (“Press 1 for Obama, 2 for Romney,” etc.), revealing a basic flaw: It’s against federal law to have computers dial cellphones, so most “robopolls” ignore about 30 percent of U.S. adults.

(…)

It is always better to look at polls’ methodology, not just their results. What’s more, the “horse race” findings are often among the least important parts of a good poll. What issues motivate voters? And who is motivated to vote? These are more crucial to understanding an election than whether a candidate is up two, three or six percentage points.

I discussed the cell phone issue in a previous post, and it’s fairly clear that excluding cell phones from your call list tends to understate Democratic support. This implicates not just PPP but also Rasmussen, another autodialer that, thanks in large part to a questionable Party ID weighting formula, tends to skew Republican more than any other pollster. The cell phone question recently became an issue in the presidential race when Gallup, in response to criticism from many quarters, announced that it had increased the number of cell-phone-only users it included in its Daily Tracking Poll. Interestingly enough, since that announcement the poll has rather consistently shown Romney with a slight lead over the President.
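To see why dropping cell phones matters, here is a back-of-the-envelope sketch of coverage bias. It assumes, hypothetically, that 30 percent of adults (the figure Cohen cites) can’t be reached by an autodialer and that they back Obama at a higher rate than reachable adults. The support figures are made up for illustration, and the sketch ignores the demographic weighting pollsters use to partly compensate. Under those assumptions the error is simply the excluded share times the gap in support between the two groups.

```python
def coverage_bias(excluded_share, support_reached, support_excluded):
    """Return (estimate, actual, bias) for a poll that can only reach part of the population.

    All inputs are proportions between 0 and 1. No weighting is applied (a simplifying assumption).
    """
    # The true value blends both groups in proportion to their share of adults.
    actual = (1 - excluded_share) * support_reached + excluded_share * support_excluded
    # The robopoll only ever hears from the reachable group.
    estimate = support_reached
    # Equivalent to excluded_share * (support_reached - support_excluded).
    return estimate, actual, estimate - actual


# Hypothetical inputs: 30% of adults unreachable, Obama at 48% among reachable adults
# and 58% among cell-only adults. Illustrative numbers only, not survey results.
est, actual, bias = coverage_bias(0.30, 0.48, 0.58)
print(f"robopoll estimate {est:.1%}, actual {actual:.1%}, bias {bias:+.1%}")
```

With those illustrative numbers the robopoll comes in about three points low. Shrink the gap between the two groups and the bias shrinks with it, which is why the size of the effect is an empirical question rather than a given.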

4. Polls are skewed if they don’t interview equal numbers of Democrats and Republicans.

Political junkies quickly scan each poll release to see the “party ID split” — the proportions of self-identified Democrats, Republicans and independents in the sample. It makes sense: With party loyalty as strong as it is, the numbers of Democratic and Republican respondents in a survey make a huge difference. But to think, as many do, that there should be equal numbers from each party is off-base, particularly when looking at polls meant to assess the general population’s views.

Though voters seem split down the middle, there have generally been more Democrats than Republicans in the country since pollsters started tracking partisan allegiances after World War II. There is often more parity in election tallies, as Republicans tend to be more reliable voters than Democrats, but that also depends on the year.

This, of course, is the famous “skewed polls” debate, which I wrote about here and here, and which took up much of the news cycle in the week or so before the October 3rd debate. The argument is that the polls showing the President leading at both the national and state level for much of September were biased because they over-sampled Democrats. Indeed, it has become quite common for conservative bloggers to pick apart every poll to get at its D/R/I (Democrat/Republican/Independent) breakdown. Interestingly, I haven’t seen that same level of analysis over the past two weeks for polls showing Mitt Romney surging, but that’s beside the point at the moment. As I’ve noted in my previous posts on this topic, political scientists and most pollsters tend to agree that Party ID is a far more fluid concept than the conservative critique assumes. In particular, people move back and forth a great deal between identifying with a specific party and calling themselves Independents. That’s why most pollsters don’t weight for Party ID the way they do for more immutable demographic characteristics like gender, race, religion and age. Saying that a certain percentage of women voted for Obama or McCain in 2008, according to exit polling, is a far different thing from saying that a certain percentage of people who identified themselves as “Republican,” “Democrat,” or “Independent” voted for Obama or McCain.
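A toy example helps show what “unskewing” a poll actually does. The numbers below are hypothetical, not from any real survey: each party group’s answers are kept exactly as reported, and only the assumed D/R/I mix is changed. This is a one-variable version of the weighting pollsters apply to demographics, sketched here for illustration only.

```python
# Hypothetical poll: self-identified party shares in the sample, and the share
# of each group backing Obama. Illustrative numbers only, not from any real survey.
sample_mix = {"D": 0.38, "R": 0.30, "I": 0.32}
obama_support = {"D": 0.92, "R": 0.06, "I": 0.45}


def topline(mix, support):
    """Overall candidate support: each group's support weighted by its share of the sample."""
    assert abs(sum(mix.values()) - 1.0) < 1e-9, "party shares must sum to 1"
    return sum(mix[party] * support[party] for party in mix)


as_measured = topline(sample_mix, obama_support)

# "Unskewing": force the sample to an assumed D/R/I split while keeping every group's answers.
assumed_mix = {"D": 0.35, "R": 0.35, "I": 0.30}
as_reweighted = topline(assumed_mix, obama_support)

print(f"as measured: {as_measured:.1%}  reweighted to assumed mix: {as_reweighted:.1%}")
```

Nothing about the respondents changed; the topline moved a little more than three points purely because of the assumed mix. That reweighted figure is only better if the assumed mix is right, and since Party ID shifts with the political climate, there’s rarely a solid basis for picking one.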

Largely thanks to Scott Rasmussen and his polls that consistently favor Republicans, conservatives have been conditioned to evaluate every poll based solely on the D/R/I breakdown. They have been misled.

5. News outlets are biased in presenting polls favorable toward President Obama.

Media-bashing is at a peak among conservatives, and some on the right routinely dismiss any poll showing Obama ahead of Romney. (And some on the left reflexively scorn polls in which Romney leads.) Before recent surveys turned in Romney’s direction, one conservative blogger went so far as to set up UnskewedPolls.com, a site offering “adjusted” public polls, tilted to favor Romney by manipulating the samples to be more Republican.

In truth, news organizations have only one bias — toward news.

Well, this last one is one I have a bit of a quibble with.

I would submit that what modern news organizations are biased toward are media narratives that drive up ratings and web traffic. When the last two weeks of September started to fill up with stories about Romney’s decline and Obama pulling away, I started to notice many analysts on the cable networks pushing the idea that “the race is not over” and that Romney could still win. That’s not really a surprise. After all, if the race really was over in September, there’d be nothing for them to talk about, and ratings and web traffic would drop off. The debate was simply a happy coincidence that allowed the media to create yet more suspense about the outcome of the election to keep us paying attention. Now, with the second debate coming up tomorrow, the question is whether President Obama is able to make a comeback. After tomorrow night the narrative for pretty much the rest of the campaign will be set in stone, and it will be one where the outcome is in suspense until the final minute. The media doesn’t have a political bias so much as a bias toward keeping us watching and driving up their ratings.

There’s one misconception that Cohen doesn’t mention, perhaps because it hits a little too close to home. The media, political pundits, and by extension the public that gets its information from them, share what I think is a misconception about polling. Polls aren’t meant to predict the outcome of an election on Election Day. They aren’t meant to declare, in an authoritative Cronkite-esque voice, that this is the way it is. They are meant to be snapshots in time of the state of a political race. As I’ve said before, it’s far more useful to look at the trends in the polls, and especially the poll averages, than to obsess over whatever single poll gets released with a big “Breaking News” banner by one news outlet or another. Each outlet will promote its own poll, understandably, but that doesn’t mean it’s the only poll analysts and viewers need to rely upon. Unfortunately, in our current world, where two of the three cable news networks are nothing more than echo chambers for a particular political ideology, we don’t get any of that kind of analysis.
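Here is a minimal sketch of the kind of averaging I mean: a plain, unweighted mean of every poll that finished in the past week. The poll dates and margins are hypothetical, and real aggregators use more elaborate rules (weighting by recency, sample size or pollster track record, for example), so treat this as an illustration rather than anyone’s actual method.

```python
from datetime import date, timedelta

# Hypothetical national polls: (field end date, Obama-minus-Romney margin in points).
# Illustrative numbers only.
polls = [
    (date(2012, 10, 1), 3.0),
    (date(2012, 10, 4), 1.0),
    (date(2012, 10, 6), -2.0),
    (date(2012, 10, 8), 4.0),
    (date(2012, 10, 9), 0.0),
]


def poll_average(poll_list, as_of, window_days=7):
    """Unweighted mean margin of polls ending in the window_days-day span that ends on as_of.

    Returns None when no poll falls inside the window.
    """
    start = as_of - timedelta(days=window_days)
    recent = [margin for day, margin in poll_list if start < day <= as_of]
    return sum(recent) / len(recent) if recent else None


print(poll_average(polls, date(2012, 10, 9)))
```

With these made-up numbers, the October 6th poll showing Romney up two would have gotten the “Breaking News” treatment on its own, while the average of the week’s polls still shows Obama narrowly ahead, by less than a point.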

In today’s fluid electoral environment, I have found that polling authority is more and more becoming the purview of Mr. Nate Silver.

@Blue Shark:

Silver is a good analyst, but it is as naive to put all your trust in him as it is for conservatives to put all their trust in Scott Rasmussen.

Excellent post, Doug – very informative… Thank you.

Doug,

About the only thing Ras and Silver have in common is that they are both men.

Doug,

There has been no decrease in pulling apart the D/R/I breakdowns from what I’ve seen, especially on blogs where polling is the theme. It doesn’t matter whether Obama or Romney is ahead; the internals are where micro-patterns are first sensed, before they show up as more visible voter trends.

I still think Rasmussen is pretty accurate (more so in national than state polls), no matter what people say are his political leanings. Reviewing the last couple of election cycles, he was at the top of the polling heap in lining up his polling numbers with the eventual end game of the election.

Party identification in the poll sample does matter. Oversampling one party or the other will lead to an inaccurate result. I don’t care what these pollsters say. All the polls weight for voter registration and voter likelihood, which are just as fluid as party identification. Exit polls are supposed to be more accurate than pre-election telephone polls. They sample people who have just voted, and the news organizations pay big money so they can use them to help call elections. Yet, nationally in 2004 and earlier this year in Wisconsin, the exit polls were wildly inaccurate. After all the navel-gazing and investigations into what went wrong, what was the conclusion of the exit polling company? They oversampled Democrats. If a poll had a margin of error of +/-10%, it would never be taken seriously, but somehow we are supposed to believe that a poll that samples 11% more Democrats than Republicans is just a “snapshot” of the electorate. Nonsense.

@Doug Mataconis:

Not really a good analogy; Rasmussen is a pollster, and Silver is most definitely not. He does have a pretty good track record based on his reading of all (valid) polls.

Basically, the conservative take on all of this is … any poll that shows them trailing is flawed.

@Septimius:

That’s hustling backwards.

In an objective poll, it’s the poll questions themselves that determine party ID and likelihood of voting. Doing anything else is subjective. That’s not to say there aren’t pollsters that weight by ID or weight their respondents by a preconceived turnout model, and that’s not to say those that do get bad results. But the fact is, when you start wanting pollsters to tweak their results based on what you think the electorate should look like, you are substituting bias for empirical evidence.

Zandar had a plausible explanation for Romney’s pre-debate rise in the polls yesterday. The pollsters had changed from questioning registered voters to likely voters. Makes a difference.

@Doug Mataconis: “Silver is a good analyst, but it is as naive to put all your trust in him as it is for conservatives to put all their trust in Scott Rasmussen. ”

Except for the whole accuracy thing.

@jan:

I find it interesting that you think Rasmussen is more credible on *national* polls than on *state* polls. My understanding is that Rasmussen’s claim has always been that it can accurately measure and predict at the state/electoral college level.

Again, national polls are nice to see, but overall far less useful in understanding or predicting what really matters in our electoral system.

Doug,

Good job calling out your two final points. It’s entirely true that the media is, knowingly or not, especially concerned with an interesting story. And so polls are definitely used as a tool in the construction of that story.

And most important is to understand that polls need to be looked at in aggregate and over longer periods of time. That’s the key importance of sites like RCP.

@Septimius:

Party identification in the poll sample does matter. Oversampling one party or the other will lead to an inaccurate result. I don’t care what these pollsters say.

Because you are an idiot. Party ID in polls is a result, exactly the same as people’s intention to vote. Your objection to the party ID answers from those polled is exactly the same as if you just objected to the result of the poll.

“2% more people said they would vote for Obama over Romney? This poll is oversampling people who will vote for Obama!”

In short, your stance is that any poll that doesn’t predict what you want to happen is flawed.